NOTE:

The older 32 GB RDIMMs with x4 data width and 8 Gb DRAM density cannot be mixed with the newer 32 GB RDIMMs with x8 data width and 16 Gb DRAM density on the same AMD EPYC™ processor.

NOTE:

The older 128 GB LRDIMMs at 2666 MT/s cannot be mixed with the newer 128 GB LRDIMMs at 3200 MT/s.
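The two mixing restrictions above can be written as simple checks on a list of modules. This is a minimal sketch: the `Dimm` class and its fields are hypothetical, and the caller chooses the scope. Pass the modules of one processor for the 32 GB RDIMM rule. The 128 GB LRDIMM rule does not name a scope, so passing every module in the system is the conservative reading.

```python
from dataclasses import dataclass
from typing import Iterable


@dataclass(frozen=True)
class Dimm:
    """Hypothetical DIMM description; field names are illustrative only."""
    kind: str               # "RDIMM" or "LRDIMM"
    capacity_gb: int
    data_width: int         # 4 for x4, 8 for x8
    dram_density_gbit: int  # DRAM density in Gb
    speed_mts: int


def mixes_32gb_rdimm_types(dimms: Iterable[Dimm]) -> bool:
    """True if x4/8 Gb and x8/16 Gb 32 GB RDIMMs appear together."""
    organizations = {
        (d.data_width, d.dram_density_gbit)
        for d in dimms
        if d.kind == "RDIMM" and d.capacity_gb == 32
    }
    return {(4, 8), (8, 16)} <= organizations


def mixes_128gb_lrdimm_speeds(dimms: Iterable[Dimm]) -> bool:
    """True if 128 GB LRDIMMs at 2666 MT/s and at 3200 MT/s appear together."""
    speeds = {
        d.speed_mts
        for d in dimms
        if d.kind == "LRDIMM" and d.capacity_gb == 128
    }
    return {2666, 3200} <= speeds
```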

General memory module installation guidelines

To ensure optimal performance of your system, observe the following general guidelines when configuring your system memory. If your system's memory configuration does not follow these guidelines, the system might not boot, might stop responding during memory configuration, or might operate with reduced memory. This section provides information about the memory population rules and non-uniform memory access (NUMA) for single-processor and dual-processor systems.

The memory bus may operate at 3200 MT/s, 2933 MT/s, or 2666 MT/s, depending on the following factors:

● The selected system profile (for example, Performance Optimized, or Custom, which can be set to run at high speed or lower)

● The maximum DIMM speed supported by the processors

● The maximum speed supported by the DIMMs
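The factors above act as upper limits on the bus speed. The following is a minimal sketch under two assumptions that this section does not state outright: the selected system profile behaves like a speed cap expressed in MT/s, and the resulting limit rounds down to one of the three bus speeds listed here. It also applies the guideline that mixed-speed modules run at the speed of the slowest installed module. `memory_bus_speed` is a hypothetical helper, not a BIOS interface.

```python
from typing import Sequence

# Bus speeds listed in this section, highest first.
BUS_SPEEDS_MTS = (3200, 2933, 2666)


def memory_bus_speed(profile_cap_mts: int, cpu_max_mts: int,
                     dimm_speeds_mts: Sequence[int]) -> int:
    """Return the bus speed permitted by all limits (hypothetical model)."""
    # The slowest installed DIMM limits every DIMM, so take the minimum.
    limit = min(profile_cap_mts, cpu_max_mts, min(dimm_speeds_mts))
    # Round down to the highest listed bus speed that does not exceed the limit.
    for speed in BUS_SPEEDS_MTS:
        if speed <= limit:
            return speed
    raise ValueError(f"no listed bus speed at or below {limit} MT/s")
```

For example, `memory_bus_speed(3200, 3200, [3200, 2933])` returns 2933 because one installed module is limited to 2933 MT/s.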

NOTE:

MT/s indicates DIMM speed in MegaTransfers per second.

The system supports Flexible Memory Configuration, enabling the system to be configured and run in any valid chipset architectural configuration. The following are the recommended guidelines for installing memory modules:

● All DIMMs must be DDR4.

● Mixing memory module capacities in a system is not supported.

● If memory modules with different speeds are installed, they operate at the speed of the slowest installed memory module.

● Populate memory module sockets only if a processor is installed.

○ For single-processor systems, sockets A1 to A16 are available.

○ For dual-processor systems, sockets A1 to A16 and sockets B1 to B16 are available.

○ In Optimizer Mode, the DRAM controllers operate independently in 64-bit mode and provide optimized memory performance.
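The hard rules in the list above (DDR4 only, no mixed capacities, sockets usable only when their processor is installed) can be checked mechanically. This minimal sketch assumes, as the dual-processor example later in this section suggests, that A sockets belong to processor 1 and B sockets to processor 2. The `Dimm` class and `check_general_rules` function are hypothetical.

```python
from dataclasses import dataclass
from typing import List, Set


@dataclass(frozen=True)
class Dimm:
    socket: str       # "A1".."A16" (processor 1) or "B1".."B16" (processor 2)
    dram_type: str    # for example "DDR4"
    capacity_gb: int


def available_sockets(processors_installed: int) -> Set[str]:
    """Sockets that may be populated for the given number of processors."""
    sockets: Set[str] = set()
    if processors_installed >= 1:
        sockets |= {f"A{i}" for i in range(1, 17)}
    if processors_installed >= 2:
        sockets |= {f"B{i}" for i in range(1, 17)}
    return sockets


def check_general_rules(dimms: List[Dimm], processors_installed: int) -> List[str]:
    """Return guideline violations; an empty list means none were found."""
    problems = []
    if any(d.dram_type != "DDR4" for d in dimms):
        problems.append("all DIMMs must be DDR4")
    if len({d.capacity_gb for d in dimms}) > 1:
        problems.append("mixing memory module capacities is not supported")
    allowed = available_sockets(processors_installed)
    for d in dimms:
        if d.socket not in allowed:
            problems.append(f"socket {d.socket} has no installed processor")
    return problems
```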

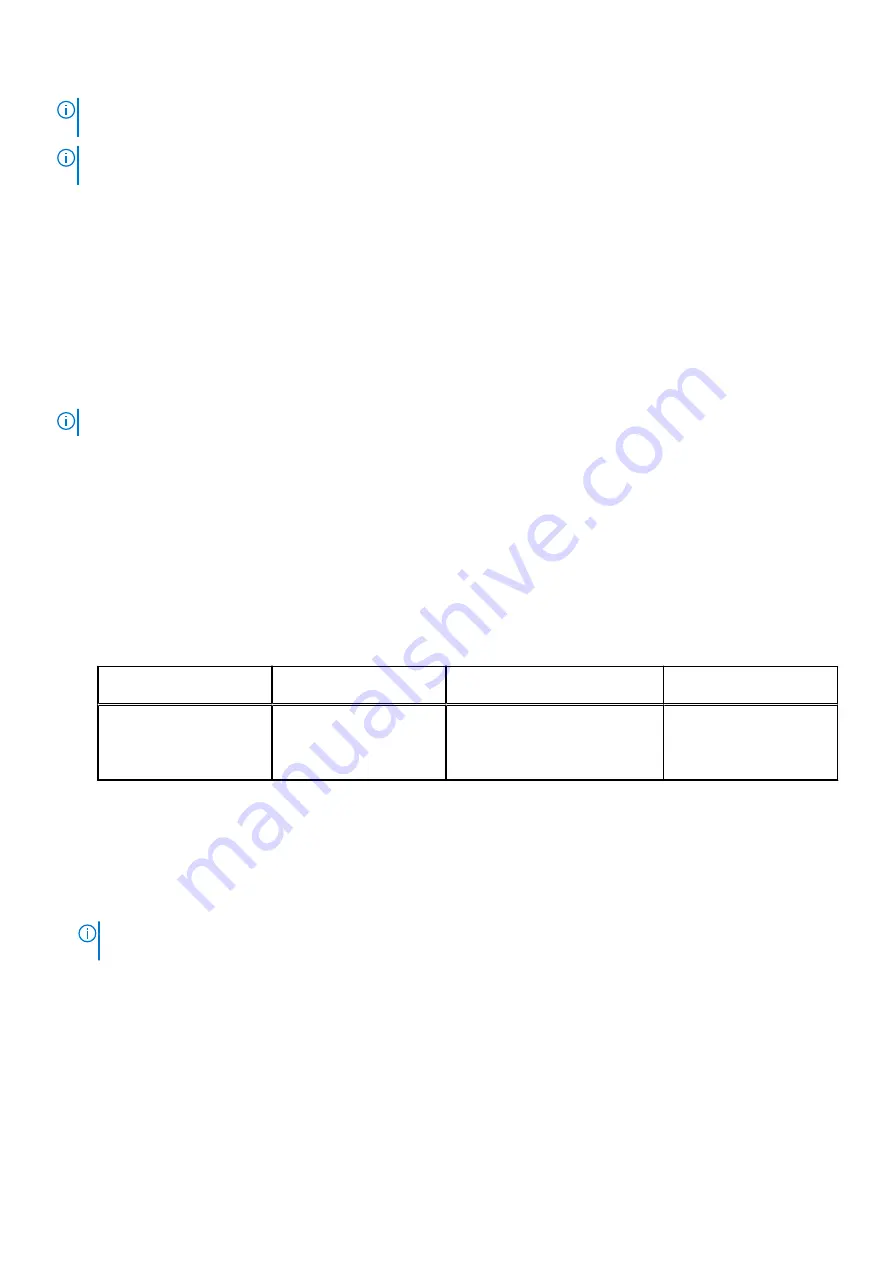

Table 12. Memory population rules

| Processor | Configuration | Memory population | Memory population information |
|---|---|---|---|
| Dual processor (start with processor 1; processor 1 and processor 2 population should match) | Optimizer (independent channel) population order | A{1}, B{1}, A{2}, B{2}, A{3}, B{3}, A{4}, B{4}, A{5}, B{5}, A{6}, B{6}, A{7}, B{7}, A{8}, B{8} | An odd number of DIMMs per processor is allowed. DIMMs must be populated identically per processor. |
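The Optimizer population order in Table 12 alternates between the two processors, so each new socket number is filled on processor 1 (A) and then on processor 2 (B). The following sketch generates that order for a given number of DIMMs per processor. It covers only the sixteen entries that Table 12 lists; `optimizer_population_order` is a hypothetical helper name.

```python
from typing import List


def optimizer_population_order(dimms_per_processor: int) -> List[str]:
    """Sockets to populate, in order, for a dual-processor Optimizer configuration.

    Follows the Table 12 sequence A{1}, B{1}, A{2}, B{2}, ..., A{8}, B{8},
    so processor 1 (A) and processor 2 (B) are always populated identically.
    """
    if not 1 <= dimms_per_processor <= 8:
        raise ValueError("Table 12 lists the order for 1 to 8 DIMMs per processor")
    order = []
    for i in range(1, dimms_per_processor + 1):
        order += [f"A{i}", f"B{i}"]  # same socket number on both processors
    return order
```

For three DIMMs per processor (an odd count, which the table allows), the order is A1, B1, A2, B2, A3, B3.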

● Populate all the sockets with white release tabs first, followed by the sockets with black release tabs.

● In a dual-processor configuration, the memory configuration for each processor must be identical. For example, if you populate socket A1 for processor 1, then populate socket B1 for processor 2, and so on.

● An unbalanced or odd memory configuration results in performance loss, and the system might not identify the installed memory modules. For best performance, always populate memory channels identically with equal DIMMs.

● The minimum recommended configuration is four equal memory modules per processor. For this configuration, AMD recommends limiting the processors in the system to 32 cores or fewer.

● To maximize performance, populate eight equal memory modules per processor (one DIMM per channel) at a time.

NOTE:

Equal memory modules are DIMMs with identical electrical specifications and capacity; they may be from different vendors.
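The recommendations above (identical population per processor, equal modules, at least four modules, 32 cores or fewer with four modules, eight modules for best performance) can be turned into advisory warnings. This is a minimal sketch: `Module`, its `electrical_spec` string, and `population_warnings` are hypothetical, and "populated identically" is approximated by comparing module counts and module equality rather than exact socket positions.

```python
from dataclasses import dataclass
from typing import List


@dataclass(frozen=True)
class Module:
    capacity_gb: int
    electrical_spec: str  # hypothetical summary of the electrical specification
    vendor: str


def equal_modules(a: Module, b: Module) -> bool:
    # Per the NOTE: same electrical specification and capacity; vendor may differ.
    return (a.capacity_gb, a.electrical_spec) == (b.capacity_gb, b.electrical_spec)


def population_warnings(per_cpu: List[List[Module]], cores_per_cpu: int) -> List[str]:
    """per_cpu[0] holds processor 1's modules; per_cpu[1], if present, processor 2's."""
    warnings = []
    if len({len(mods) for mods in per_cpu}) > 1:
        warnings.append("processors are not populated identically")
    all_mods = [m for mods in per_cpu for m in mods]
    if any(not equal_modules(all_mods[0], m) for m in all_mods[1:]):
        warnings.append("memory modules are not all equal")
    for cpu, mods in enumerate(per_cpu, start=1):
        n = len(mods)
        if n < 4:
            warnings.append(f"processor {cpu}: fewer than the 4 recommended modules")
        elif n == 4 and cores_per_cpu > 32:
            warnings.append(f"processor {cpu}: with 4 modules, AMD recommends 32 cores or fewer")
        if n != 8:
            warnings.append(f"processor {cpu}: 8 modules (one per channel) maximize performance")
    return warnings
```

Modules from different vendors compare as equal here as long as capacity and electrical specification match, which mirrors the NOTE.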

Memory interleaving with Non-uniform memory access (NUMA)

Non-uniform memory access (NUMA) is a memory design used in multiprocessing, where the memory access time depends on the memory location relative to the processor. In NUMA, a processor can access its own local memory faster than non-local memory.

NUMA nodes per socket (NPS) is a new feature that allows you to configure the memory NUMA domains per socket. The configuration can consist of one whole domain (NPS1), two domains (NPS2), or four domains (NPS4). On a two-socket platform, an additional NPS profile (NPS0) is available that maps the whole system memory as a single NUMA domain. For more information about memory interleaving for NPSx, see the Memory interleaving population rules section in this topic.
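Because NPS counts memory domains per socket, the total number of memory NUMA domains follows from the NPS setting and the number of populated processor sockets. The following sketch is an illustrative model under that reading; `numa_domains` is a hypothetical helper, and it does not claim to predict exactly what an operating system reports.

```python
# Memory NUMA domains per socket for each NPS setting described above.
NPS_DOMAINS_PER_SOCKET = {"NPS1": 1, "NPS2": 2, "NPS4": 4}


def numa_domains(nps: str, sockets: int) -> int:
    """Total memory NUMA domains for an NPS setting (illustrative model)."""
    if sockets not in (1, 2):
        raise ValueError("this system has one or two processor sockets")
    if nps == "NPS0":
        if sockets != 2:
            raise ValueError("NPS0 is available only on two-socket platforms")
        return 1  # all system memory mapped as a single NUMA domain
    return NPS_DOMAINS_PER_SOCKET[nps] * sockets
```

For example, NPS4 on a two-socket system gives 4 × 2 = 8 domains, while NPS0 on the same system gives one.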

BIOS implementation for NPSx

Installing and removing system components
