
IBM Power System E850C: Technical Overview and Introduction

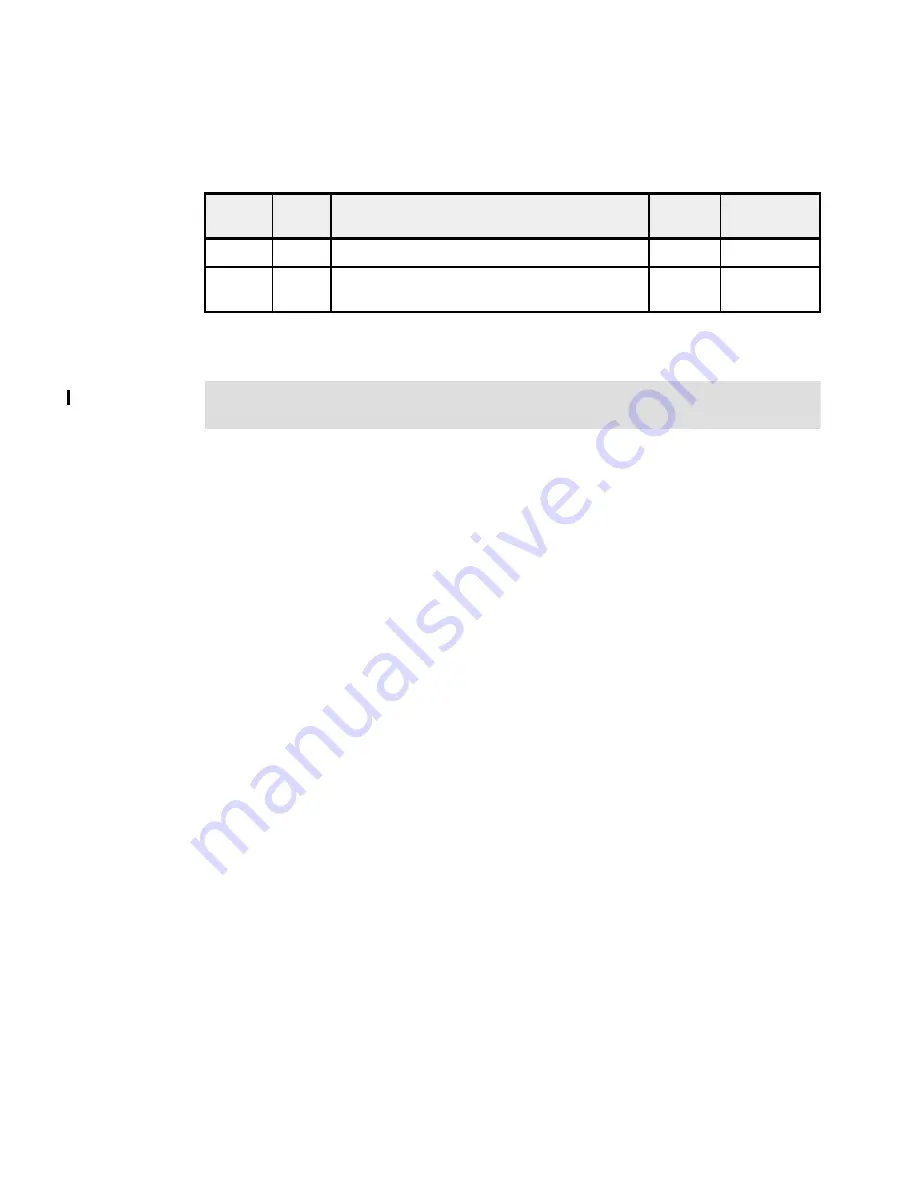

Table 2-20 lists the available FCoE adapters. They are high-performance Converged Network Adapters (CNAs) that use SR optics (#EN0H) or SFP+ copper (#EN0K) on their 10 Gb ports. Each 10 Gb port can simultaneously provide network interface card (NIC) traffic and Fibre Channel functions.

Table 2-20 Available FCoE adapters

| Feature code | CCIN | Description | Max per system | OS support |
|---|---|---|---|---|
| EN0H | 2B93 | PCIe2 4-port (10 Gb FCoE & 1 GbE) SR & RJ45 | 50 | AIX, Linux |
| EN0K | 2CC1 | PCIe2 4-port (10 Gb FCoE & 1 GbE) SFP+ Copper & RJ45 | 50 | AIX, Linux |

Note: Adapters #EN0H and #EN0K support SR-IOV when minimum firmware and software levels are met.

For more information about FCoE, see An Introduction to Fibre Channel over Ethernet, and Fibre Channel over Convergence Enhanced Ethernet, REDP-4493.

2.9.7 InfiniBand Host Channel adapter

The InfiniBand Architecture (IBA) is an industry-standard architecture for server I/O and inter-server communication. It was developed by the InfiniBand Trade Association (IBTA) to provide the reliability, availability, performance, and scalability that present and future server systems require, at levels beyond what bus-oriented I/O structures can achieve.

InfiniBand is an open set of interconnect standards and specifications. The main InfiniBand specification is published by the IBTA and is available on the IBTA website.

InfiniBand is based on a switched fabric architecture of serial point-to-point links. These links can be connected either to host channel adapters (HCAs), which are used primarily in servers, or to target channel adapters (TCAs), which are used primarily in storage subsystems.

The InfiniBand physical connection consists of multiple byte lanes. Each individual byte lane is a four-wire, bidirectional connection that runs at 2.5, 5.0, or 10.0 Gbps. Combinations of link width and byte lane speed allow overall link speeds of 2.5 Gbps to 120 Gbps. The architecture defines a layered hardware protocol and a software layer to manage initialization and the communication between devices. Each link can support multiple transport services for reliability and multiple prioritized virtual communication channels.

For more information about InfiniBand, see HPC Clusters Using InfiniBand on IBM Power Systems Servers, SG24-7767.

A connection to supported InfiniBand switches is made by using the QDR optical cables #3290 and #3293.
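To illustrate how InfiniBand link width and byte lane speed combine into the 2.5 Gbps to 120 Gbps range stated for InfiniBand links, the following Python sketch multiplies the number of byte lanes in a link by the per-lane speed. It is a minimal sketch under stated assumptions: the lane speeds come from the text, but the link widths of 1x, 4x, and 12x lanes are an assumption not given in this section, and the function names are hypothetical. The results are nominal link speeds, not measured throughput.

```python
# Hypothetical helpers: compute nominal InfiniBand link speeds as
# (number of byte lanes in the link) x (per-lane speed).
# Assumption: link widths of 1x, 4x, and 12x lanes; only the lane
# speeds below are taken from the text.

LANE_SPEEDS_GBPS = (2.5, 5.0, 10.0)  # per-lane speeds named in the text
LINK_WIDTHS = (1, 4, 12)             # assumed lanes per link


def link_speed_gbps(width: int, lane_speed_gbps: float) -> float:
    """Nominal link speed: number of byte lanes times per-lane speed."""
    return width * lane_speed_gbps


def all_link_speeds() -> dict[tuple[int, float], float]:
    """Map every (width, lane speed) combination to its nominal link speed."""
    return {
        (w, s): link_speed_gbps(w, s)
        for w in LINK_WIDTHS
        for s in LANE_SPEEDS_GBPS
    }


if __name__ == "__main__":
    speeds = all_link_speeds()
    for (w, s), total in sorted(speeds.items()):
        print(f"{w:>2}x link at {s:4.1f} Gbps/lane -> {total:6.1f} Gbps")
    print(f"range: {min(speeds.values())} - {max(speeds.values())} Gbps")
```

Running it lists the nine width and lane-speed combinations and reports a range of 2.5 - 120.0 Gbps: the narrowest link at the slowest lane speed gives the lower bound, and the widest link at the fastest lane speed gives the upper bound.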


Back cover: ibm.com/redbooks. Printed in U.S.A. ISBN 0738455687. REDP-5412-00.