Chapter 4

Analog Input

© National Instruments Corporation


16-bit ADC converts analog inputs into one of 65,536 (= $2^{16}$) codes, that is, one of 65,536 possible digital values. These values are spread fairly evenly across the input range. So, for an input range of –10 V to 10 V, the voltage of each code of a 16-bit ADC is:

$$\frac{10\,\text{V} - (-10\,\text{V})}{2^{16}} \approx 305\,\mu\text{V}$$

M Series devices use a calibration method that requires some codes (typically about 5% of the codes) to lie outside the specified range. This calibration method improves absolute accuracy, but it increases the nominal resolution of each input range (the voltage represented by one code) by about 5% over what the formula above would indicate.
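The arithmetic above can be checked with a short calculation. This is a minimal sketch that assumes an ideal ADC whose codes are spread exactly evenly, and it models the "about 5%" over-range calibration simply as a span 5% wider; `code_width_uV` and `RANGES_V` are hypothetical names used only for illustration.

```python
# Sketch: voltage represented by one code of an ideal N-bit ADC.
# Assumption: codes are spread exactly evenly across the span, and the
# "about 5%" over-range calibration is modelled as a span 5% wider.

def code_width_uV(v_min: float, v_max: float, bits: int = 16,
                  over_range: float = 0.0) -> float:
    """Return the nominal voltage of one ADC code, in microvolts.

    over_range is the extra fraction of span covered by the codes;
    0.05 approximates the codes that M Series calibration places
    outside the specified range.
    """
    span_v = (v_max - v_min) * (1.0 + over_range)
    return span_v / 2**bits * 1e6


# Input ranges of Table 4-1, as (min, max) in volts.
RANGES_V = {
    "-10 V to 10 V": (-10.0, 10.0),
    "-5 V to 5 V": (-5.0, 5.0),
    "-1 V to 1 V": (-1.0, 1.0),
    "-200 mV to 200 mV": (-0.2, 0.2),
}

if __name__ == "__main__":
    for name, (lo, hi) in RANGES_V.items():
        ideal = code_width_uV(lo, hi)
        nominal = code_width_uV(lo, hi, over_range=0.05)
        print(f"{name:>18}: ideal {ideal:7.2f} uV, 5% over-range {nominal:7.2f} uV")
```

Rounded, the over-range column comes out at roughly 320, 160, 32, and 6.4 μV, in line with Table 4-1, while the ideal column reproduces the 305 μV figure for the –10 V to 10 V range.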

Choose an input range that matches the expected range of your signal. A large input range can accommodate a large signal variation, but each code then represents a larger voltage, so the voltage resolution is coarser. Choosing a smaller input range gives finer voltage resolution (a smaller voltage per code), but may result in the input signal going out of range.
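One way to apply this guidance is to pick the smallest range from Table 4-1 that still covers the signal's expected peak. The sketch below assumes a signal that is roughly symmetric about 0 V and a user-chosen headroom margin; `pick_input_range`, `margin`, and `NI_6232_RANGES_V` are hypothetical names, not part of NI-DAQmx.

```python
# Sketch: choose the smallest Table 4-1 range that still contains the signal.
# Hypothetical helper; ranges are symmetric, stored as their positive limit in volts.
NI_6232_RANGES_V = (0.2, 1.0, 5.0, 10.0)


def pick_input_range(expected_peak_v: float, margin: float = 0.0,
                     ranges=NI_6232_RANGES_V) -> float:
    """Return the positive limit (V) of the smallest range covering the signal.

    expected_peak_v: largest expected magnitude of the signal, in volts.
    margin: extra headroom as a fraction (0.1 = 10%) to reduce the risk of
            the signal going out of range.
    """
    needed = abs(expected_peak_v) * (1.0 + margin)
    for limit in sorted(ranges):
        if needed <= limit:
            return limit
    raise ValueError(f"{expected_peak_v} V exceeds the largest range (+/-{max(ranges)} V)")


if __name__ == "__main__":
    for peak in (0.15, 0.9, 3.0, 8.0):
        print(f"peak {peak} V -> use +/-{pick_input_range(peak, margin=0.1)} V")
```

The margin trades a little resolution for protection against unexpected peaks; with `margin=0.1`, a 0.19 V peak is pushed from the –200 mV to 200 mV range up to the –1 V to 1 V range.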

For more information on programming these settings, refer to the NI-DAQmx Help or the LabVIEW 8.x Help.

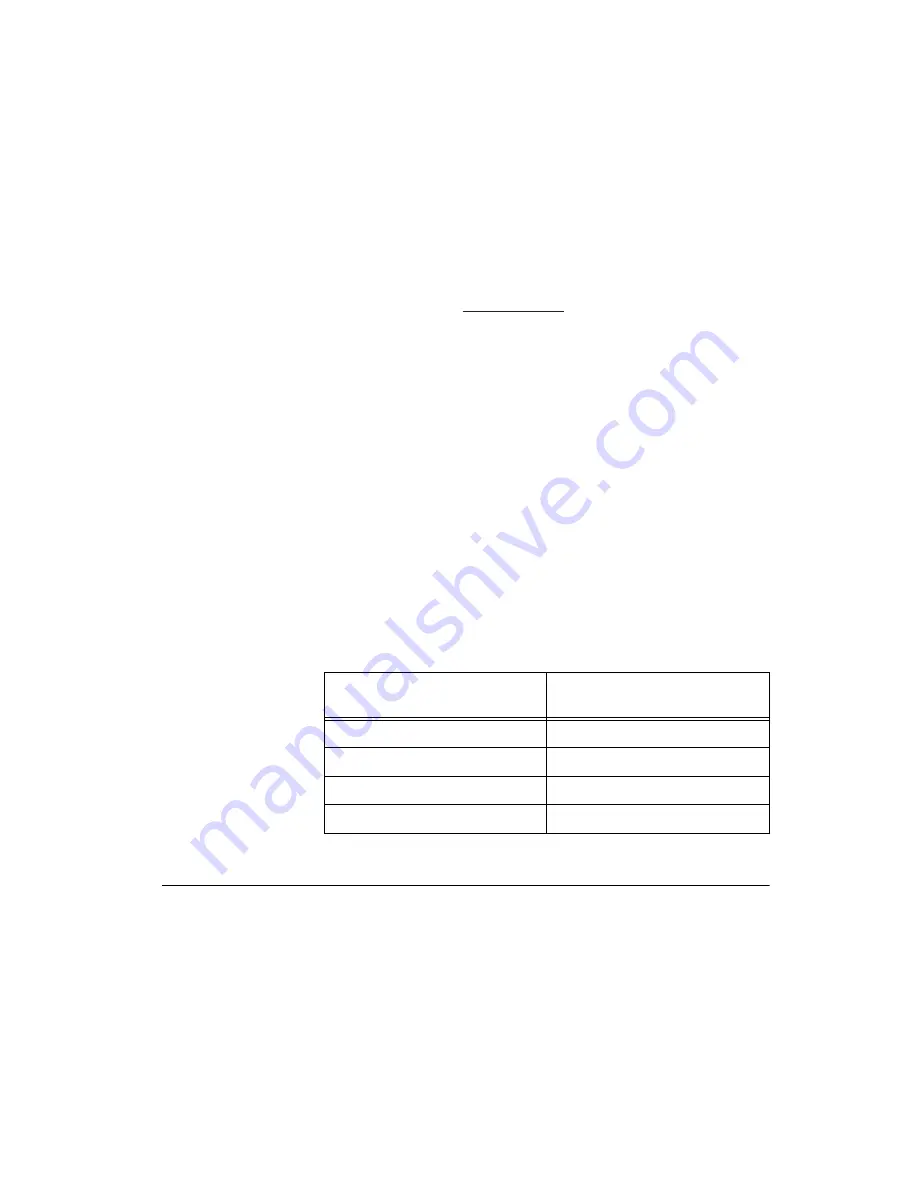

Table 4-1 shows the input ranges and resolutions supported by the NI 6232/6233 device.

Table 4-1. Input Ranges for NI 6232/6233

| Input Range | Nominal Resolution Assuming 5% Over Range |
|---|---|
| –10 V to 10 V | 320 μV |
| –5 V to 5 V | 160 μV |
| –1 V to 1 V | 32 μV |
| –200 mV to 200 mV | 6.4 μV |

Analog Input Ground-Reference Settings

NI 6232/6233 devices support the analog input ground-reference settings shown in Table 4-2.
