2. Determine the file system name. On the primary MGMT node:

[admin@sn11000n000 ~]$ cscli fs_info

Examples show a file system named sn11000:

[admin@sn11000n000 ~]$

cscli fs_info

----------------------------------------------------------------------------------------------------

OST Redundancy style: Declustered Parity (GridRAID)

Disk I/O Integrity guard (ANSI T10-PI) is not supported by hardware

----------------------------------------------------------------------------------------------------

Information about "sn11000" file system:

----------------------------------------------------------------------------------------------------

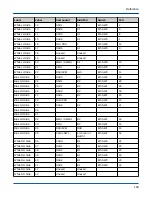

Node Role Targets Failover partner Devices

----------------------------------------------------------------------------------------------------

sn11000n000 mgmt 1 / 0 sn11000n001

sn11000n001 mgmt 1 / 0 sn11000n000

sn11000n002 mgs 0 / 1 sn11000n003 /dev/md65

sn11000n003 mds 0 / 1 sn11000n002 /dev/md66

sn11000n004 oss 0 / 1 sn11000n005 /dev/md0

sn11000n005 oss 0 / 1 sn11000n004 /dev/md1
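
If the file system name is needed in a script, it can be read from the fs_info output rather than copied by hand. The following is a minimal sketch, not part of the product: fs_name_from_info is a hypothetical helper name, and it assumes the output contains a line of the form Information about "<name>" file system: as in the example above.

```shell
# Hypothetical helper (not a cscli feature): reads `cscli fs_info` output on
# stdin and prints the name quoted in the
#   Information about "<name>" file system:
# line. Assumes the output format shown in the example above.
fs_name_from_info() {
    # Split each line on double quotes; the name is the second field.
    awk -F'"' '/^Information about "/ { print $2; exit }'
}

# Usage on the primary MGMT node (not executed here):
#   cscli fs_info | fs_name_from_info
```

With the example output above, the helper prints sn11000.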

3. If the output of the previous command indicates that the Lustre file system is not started (e.g., the Targets column shows 0 / 1 instead of 1 / 1 for OSS nodes), start the Lustre file system. The example below starts a file system named testfs:

[admin@sn11000n000 ~]$ cscli mount -f testfs
mount: MGS is starting...
mount: MGS is started!
mount: testfs is started on sn11000n002,sn11000n003!
mount: testfs is started on sn11000n004,sn11000n005!
mount: All start commands for filesystem testfs were sent.
mount: Use "cscli show_nodes" to see mount status.

[admin@sn11000n000 ~]$ cscli show_nodes
-----------------------------------------------------------------------------------------
Hostname     Role     Power State  Service State  Targets  HA Partner   HA Resources
-----------------------------------------------------------------------------------------
sn11000n000  MGMT     On           Started        1 / 0    sn11000n001  Local
sn11000n001  MGMT     On           Started        1 / 0    sn11000n000  Local
sn11000n002  MDS,MGS  On           Started        1 / 1    sn11000n003  Local
sn11000n003  MDS,MGS  On           Started        1 / 1    sn11000n002  Local
sn11000n004  OSS      On           Started        1 / 1    sn11000n005  Local
sn11000n005  OSS      On           Started        1 / 1    sn11000n004  Local
-----------------------------------------------------------------------------------------
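
The Targets check described in step 3 can also be done mechanically. The sketch below is illustrative only: nodes_not_started is a hypothetical helper, and it assumes that the first number in the Targets column is the count of started targets and the second is the count expected, which is how step 3 reads 0 / 1 versus 1 / 1. It prints the hostname of each row where the first number is lower than the second.

```shell
# Hypothetical helper (not a cscli feature): reads `cscli show_nodes` or
# `cscli fs_info` output on stdin and prints the hostname of every row whose
# Targets column reads "x / y" with x < y.
# Assumption: x = started targets, y = expected targets.
nodes_not_started() {
    awk '{
        # Locate the "/" field; the numbers on either side are the counts.
        for (i = 2; i < NF; i++) {
            if ($i == "/" && $(i-1) ~ /^[0-9]+$/ && $(i+1) ~ /^[0-9]+$/) {
                if ($(i-1) + 0 < $(i+1) + 0) print $1
                break
            }
        }
    }'
}

# Usage on the primary MGMT node (not executed here):
#   cscli show_nodes | nodes_not_started
```

Empty output means no row reported fewer started targets than expected. Header and separator lines are ignored because they contain no "x / y" field.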

4. Log in to the secondary MGMT node:

[MGMT0]$ ssh -l admin secondary_MGMT_node

5. Change to root user on the secondary MGMT node:

[MGMT1]$ sudo su -

6. Obtain the Lustre network identifiers (NIDs) of each MGS node (typically n002 and n003):

[MGMT1]# ssh n002 lctl list_nids

[MGMT1]# ssh n003 lctl list_nids
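
When the NIDs are to be recorded for later steps, the two commands above can be wrapped in a loop. This is a sketch under stated assumptions: collect_mgs_nids is a hypothetical helper name, the node names passed to it are the MGS nodes for the system (n002 and n003 are only the typical case), and root on the secondary MGMT node can run ssh to those nodes as shown in step 6.

```shell
# Hypothetical helper: for each node name given as an argument, runs the same
# `ssh <node> lctl list_nids` command used in step 6 and prints
#   <node>: <nid>[,<nid>...]
# on one line per node.
collect_mgs_nids() {
    for node in "$@"; do
        printf '%s: ' "$node"
        # Join multiple NIDs (one per line) into a comma-separated list.
        ssh "$node" lctl list_nids | paste -sd, -
    done
}

# Usage as root on the secondary MGMT node (not executed here):
#   collect_mgs_nids n002 n003
```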

